HotpotQA is a dataset for Diverse, Explainable Multi-hop Question Answering.

Style: Extractive-based

HotpotQA has 4 features, which can also be seen as challenges:

- questions require finding and reasoning over multiple supporting documents to answer

- questions are diverse and not constrained to any pre-existing knowledge bases or knowledge schemas

- contains two types of questions:

  - 1. bridge

  - 2. comparison (factoid comparison questions)

- provides sentence-level supporting facts required for reasoning

  - introduces strong supervision for reasoning and makes the final predictions explainable

  - (the supporting facts can form a reasoning chain)

Reference papers on HotpotQA task:

- Cognitive Graph for Multi-Hop Reading Comprehension at Scale. ACL, 2019.

- Dynamically Fused Graph Network for Multi-Hop Reasoning. ACL, 2019.

- Answering while Summarizing: Multi-task Learning for Multi-Hop QA with Evidence Extraction. ACL, 2019.

- Compositional Questions Do Not Necessitate Multi-hop Reasoning. ACL, 2019. (short)

- Multi-hop Reading Comprehension through Question Decomposition and Rescoring. ACL, 2019.

Task Definition

Input:

- query

- $N_p = 10$ paragraphs

Output:

- answer span

- supporting facts (sentences), which serve as a supervision signal during training
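The input and output above can be pictured as a small record type. This is only a sketch: the class and field names (`Paragraph`, `HotpotExample`, `supporting_facts` as `(title, sentence index)` pairs) are illustrative assumptions, not a loader for the released files.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Paragraph:
    title: str
    sentences: List[str]


@dataclass
class HotpotExample:
    question: str                            # the query
    paragraphs: List[Paragraph]              # N_p candidate paragraphs
    answer: str                              # gold answer span
    supporting_facts: List[Tuple[str, int]]  # (paragraph title, sentence index)


def supporting_sentences(example: HotpotExample) -> List[str]:
    """Resolve the supporting-fact pointers to the actual sentences."""
    by_title = {p.title: p for p in example.paragraphs}
    return [by_title[title].sentences[idx] for title, idx in example.supporting_facts]


# Toy example with placeholder text (not taken from the dataset).
toy = HotpotExample(
    question="toy question",
    paragraphs=[
        Paragraph("P1", ["p1 sentence 0", "p1 sentence 1"]),
        Paragraph("P2", ["p2 sentence 0"]),
    ],
    answer="toy answer",
    supporting_facts=[("P1", 1), ("P2", 0)],
)
facts = supporting_sentences(toy)
```

The supporting sentences are what a model is additionally trained to predict, alongside the answer span.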

Cognitive Graph QA

Cognitive Graph for Multi-Hop Reading Comprehension at Scale.

ACL, 2019.

Ming Ding (Chang Zhou)

THU (DAMO, Alibaba)

1.Motivation

Dual Process Theory from the cognitive process of humans:

- System 1 first retrieves relevant information by following attention, via an implicit, unconscious, intuitive process

  - efficiently provides resources according to requests

- System 2 then conducts an explicit, conscious, controllable reasoning process based on the results of System 1

  - enables diving deeper into relational information by performing sequential thinking in working memory

  - slower, but embodies uniquely human rationality

- For complex reasoning, the two systems are coordinated to perform fast and slow thinking iteratively

This work:

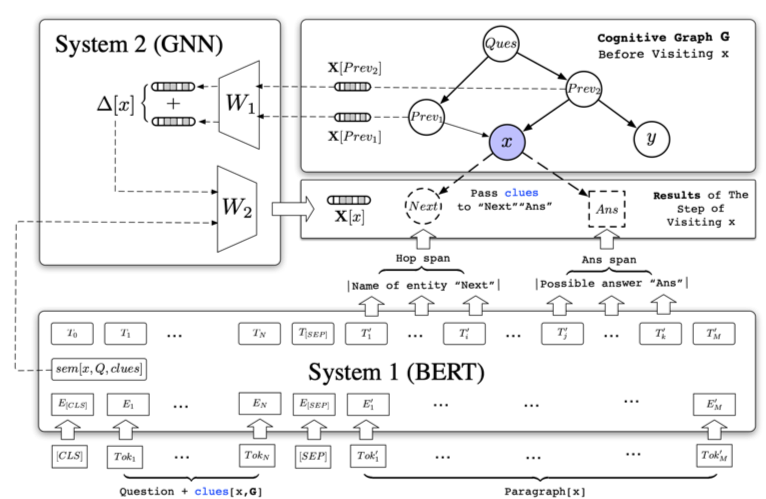

- System 1 extracts question-relevant entities and answer candidates from paragraphs and encodes their semantic information

- The extracted entities are organized into a cognitive graph, which plays the role of working memory

- System 2 conducts the reasoning procedure over the graph and collects clues to guide System 1 to better extract next-hop entities

- The process iterates until all possible answers are found; the final answer is then chosen based on the reasoning results from System 2
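The iteration above can be sketched as a frontier loop. This is a toy control-flow sketch, not the paper's implementation: `extract` (standing in for System 1) and `score` (standing in for System 2) are hypothetical callables, each node is expanded only once, and the entity names are placeholders.

```python
from collections import deque


def cognitive_graph_qa(question_entities, extract, score):
    """Grow a graph from the question entities, then pick an answer.

    extract(node, clues) -> (next_hop_entities, answer_candidates)  # System 1 stand-in
    score(candidate, clues) -> float                                # System 2 stand-in
    """
    graph = {e: set() for e in question_entities}  # node -> successor nodes
    clues = {e: [] for e in question_entities}     # node -> predecessors that led to it
    answers = set()
    frontier = deque(question_entities)
    while frontier:
        x = frontier.popleft()
        next_hops, candidates = extract(x, clues[x])
        for y in list(next_hops) + list(candidates):
            if y not in graph:
                graph[y] = set()
                clues[y] = []
                if y in next_hops:
                    frontier.append(y)  # only next-hop entities are expanded further
            graph[x].add(y)
            clues[y].append(x)
        answers.update(candidates)
    if not answers:
        return graph, None
    best = max(sorted(answers), key=lambda a: score(a, clues[a]))
    return graph, best


# Toy lookup table replacing the neural extractor (assumption for illustration).
TABLE = {
    "q": (["e1"], []),
    "e1": (["e2", "e3"], []),
    "e2": ([], ["a1"]),
    "e3": ([], ["a1", "a2"]),
}
graph, answer = cognitive_graph_qa(
    ["q"],
    extract=lambda node, clues: TABLE.get(node, ([], [])),
    score=lambda cand, clues: len(clues),  # toy score: number of supporting predecessors
)
```

In this toy run `a1` is reached from both `e2` and `e3`, so it outscores `a2`.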

2.Model Details

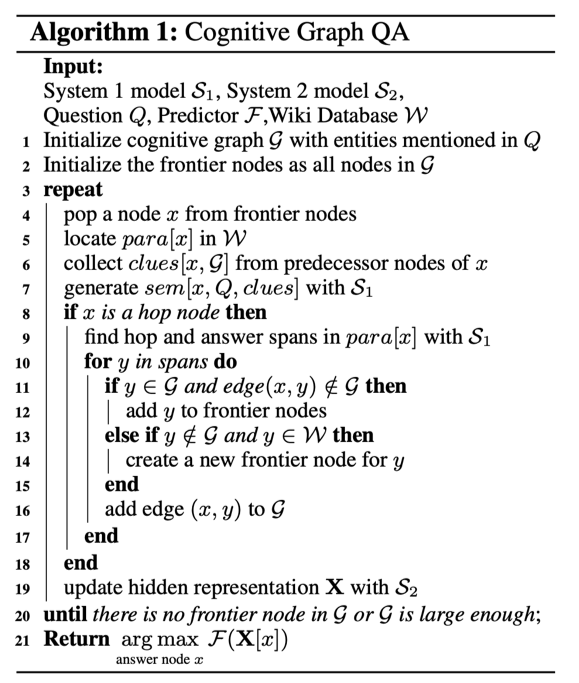

Algorithm of Cognitive Graph QA

Model Overview

3.Experiments

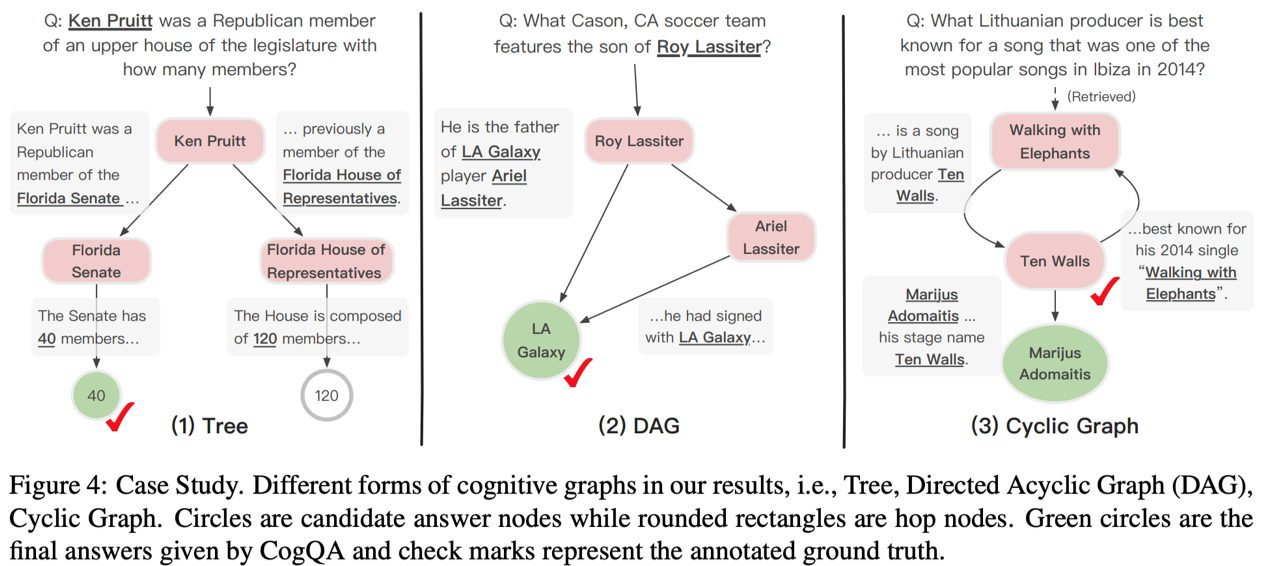

case study

Dynamically Fused Graph Network

Dynamically Fused Graph Network for Multi-Hop Reasoning.

ACL, 2019.

Yunxuan Xiao

Shanghai Jiao Tong University. (ByteDance AT Lab)

1.Motivation

Challenges:

- 1) filtering out noise from multiple paragraphs and extracting useful information

  - previous work: build an entity graph from the input paragraphs and apply a GNN to aggregate information

  - shortcomings: a static, global entity graph for each QA pair leads to implicit reasoning and a lack of explainability

- 2) previous work aggregates document information into an entity graph, and answers are then selected directly from the entities of that graph

  - shortcomings: the answer may not reside among the entities of the extracted graph

This work:

- intuition: mimic the human reasoning process in multi-hop QA

  - start from an entity of interest in the query

  - focus on the words surrounding the start entities

  - connect to some related entity in the neighborhood, guided by the question

  - repeat this step to form a reasoning chain, and land on some entity or snippet likely to be the answer

- constructs a dynamic entity graph

  - propagates information on the dynamic entity graph under a soft-mask constraint

- bidirectional fusion:

  - aggregates information from the document to the entity graph and from the entity graph back to the document

2.Model Details

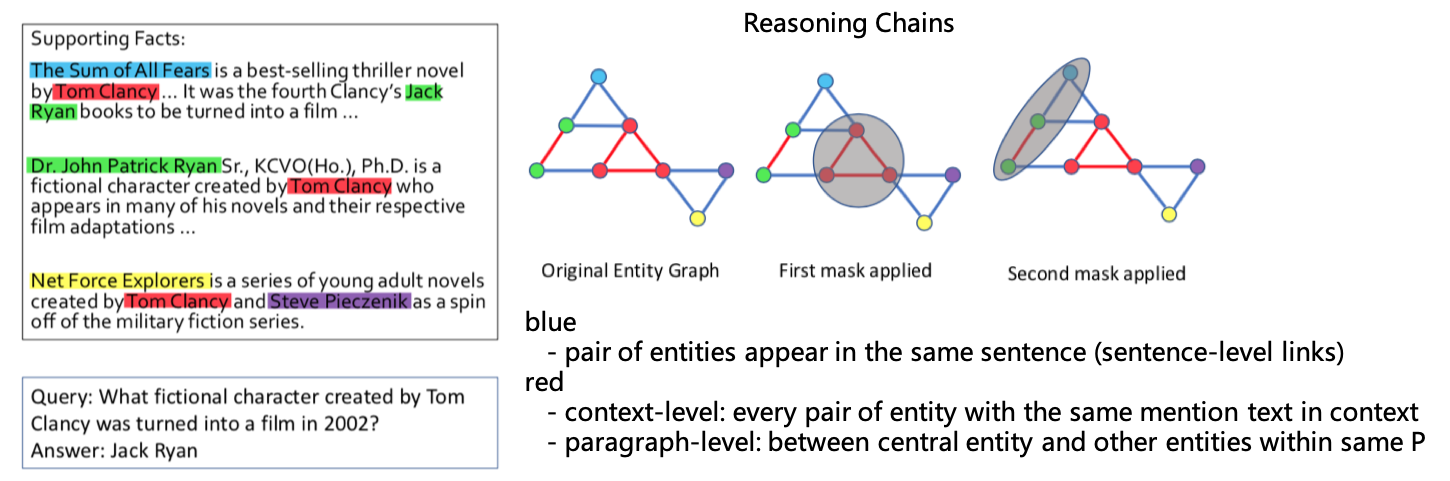

2.1 Graph Construction

node: POL (Person, Organization, Location) entities

edge:

- 1) sentence-level: co-occurrence in the same sentence

- 2) context-level: coreference

- 3) paragraph-level: link with central entities extracted from the title sentence of each paragraph

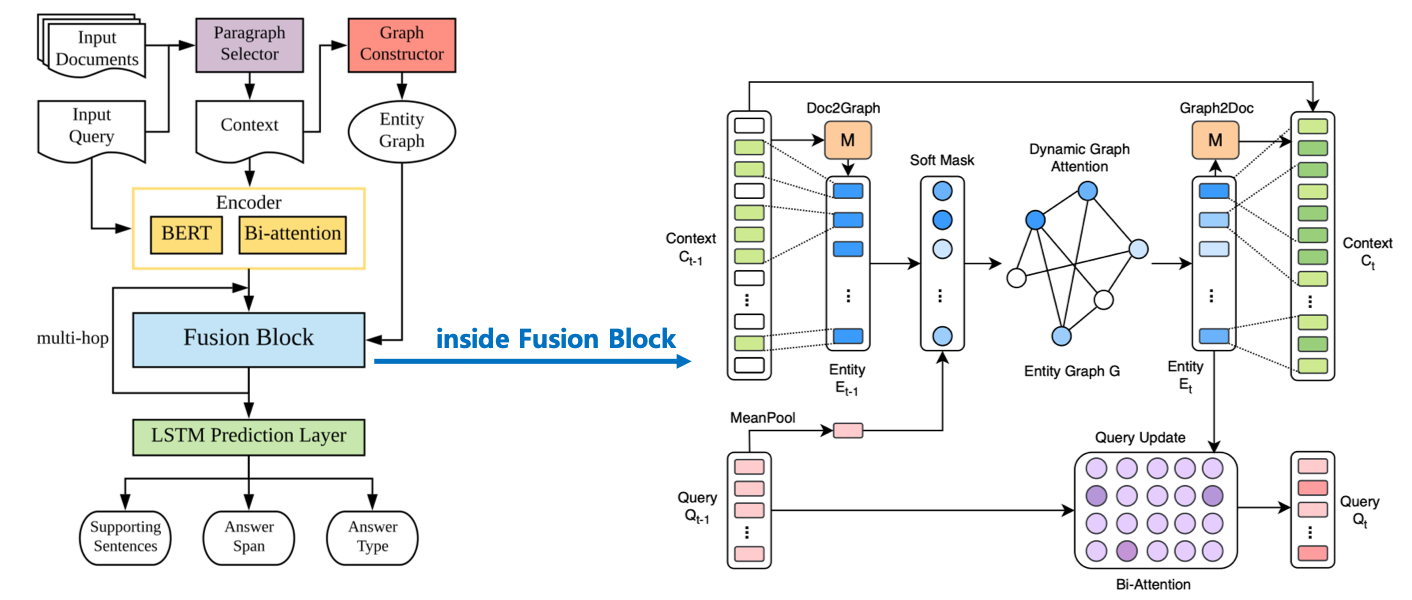
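The three edge rules can be sketched in a few lines. Assumptions in this sketch: entity mentions are already extracted as `(text, paragraph_id, sentence_id)` tuples, coreference is approximated by identical mention text, and `central` maps each paragraph to the index of its title entity. All names are hypothetical.

```python
from itertools import combinations


def build_entity_graph(mentions, central):
    """Return {(i, j): edge_type} over mention indices, with i < j.

    mentions: list of (text, paragraph_id, sentence_id)
    central:  dict paragraph_id -> index of that paragraph's central (title) entity
    """
    edges = {}
    for i, j in combinations(range(len(mentions)), 2):
        text_i, par_i, sent_i = mentions[i]
        text_j, par_j, sent_j = mentions[j]
        if (par_i, sent_i) == (par_j, sent_j):
            edges[(i, j)] = "sentence"    # co-occur in the same sentence
        elif text_i == text_j:
            edges[(i, j)] = "context"     # same mention text (coreference stand-in)
        elif par_i == par_j and central.get(par_i) in (i, j):
            edges[(i, j)] = "paragraph"   # linked to the paragraph's central entity
    return edges


# Placeholder mentions: two paragraphs, entity "A" appears in both.
mentions = [("A", 0, 0), ("B", 0, 0), ("C", 0, 1), ("A", 1, 0), ("D", 1, 1)]
central = {0: 0, 1: 3}
edges = build_entity_graph(mentions, central)
```

Entities such as `B` and `C` that share a paragraph but not a sentence stay unconnected unless one of them is the central entity.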

2.2 Model

Paragraph Selector

- a BERT sentence-pair classifier takes $[Q, P_i]$ and scores the relevance of each paragraph

- all selected $P_i$ are concatenated into the context $C$

Encoder

- use BERT to encode the concatenation of query and context, which performs better

- output:

  - query $Q_0 \in \mathbb{R}^{L\times d_2}$

  - context $C_0 \in \mathbb{R}^{M\times d_2}$

Fusion Block

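- document to graph

  - binary matrix $M \in \{0,1\}^{M \times N}$: $M_{i,j} = 1$ if the $i$-th context token lies within the text span of the $j$-th entity

  - entity embedding: mean-max pooling over each entity's token span, $E^{t-1} = [e_1^{t-1}, \dots, e_N^{t-1}] \in \mathbb{R}^{2d_2 \times N}$

- dynamic graph attention

  - soft mask: signifies the start entities in the $t$-th reasoning step

    - query vector $\tilde{q}^{t-1} = \mathrm{MeanPooling}(Q^{t-1})$

    - masked entities $\tilde{E}^{t-1} = [m_1^t e_1^{t-1}, \dots, m_N^t e_N^{t-1}]$

  - propagate information over the graph with GAT (the more relevant an entity is to the query, the more information its neighbors receive from it)

    - $e^t_i = \mathrm{ReLU}\left( \sum_{j\in B_i} \alpha_{j,i}^t h_j^t \right)$, where $B_i$ is the set of neighbors of entity $i$, $h_j^t$ is the hidden state of masked entity $j$, and $\alpha_{j,i}^t$ is the attention weight from entity $j$ to entity $i$

    - $E^t = [e_1^t, \dots, e_N^t]$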

- updating query

  - $Q^t = \mathrm{BiAttention}(Q^{t-1}, E^t)$

- graph to document

  - issue: an unrestricted answer (one that need not be an entity) still cannot be traced back to the context

  - keep information flowing from the entities back to the tokens in the context

  - reuse the same binary matrix $M$

  - update the context embedding

    - $C^t = \mathrm{LSTM}([C^{t-1}, M {E^t}^{\top}]) \in \mathbb{R}^{M\times d_2}$
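The tensor bookkeeping of one fusion step can be illustrated with plain Python lists. This is a simplified sketch under stated assumptions: the soft mask is computed as a sigmoid of a scaled dot product with no learned projection, and the GAT propagation, the BiAttention query update, and the LSTM are left out. Function names are hypothetical, and every entity is assumed to cover at least one token.

```python
import math


def doc_to_graph(context, M):
    """Mean-max pool the token vectors of each entity span.

    context: list of token vectors; M[i][j] == 1 iff token i belongs to entity j.
    """
    entities = []
    for j in range(len(M[0])):
        span = [tok for tok, row in zip(context, M) if row[j]]
        dim = len(span[0])
        mean = [sum(tok[k] for tok in span) / len(span) for k in range(dim)]
        peak = [max(tok[k] for tok in span) for k in range(dim)]
        entities.append(mean + peak)  # concatenation: 2 * d_2 values per entity
    return entities


def soft_mask(query_vec, entities):
    """Scale each entity by its sigmoid relevance to the query vector."""
    scale = math.sqrt(len(query_vec))
    masks = []
    for e in entities:
        gamma = sum(q * x for q, x in zip(query_vec, e)) / scale
        masks.append(1.0 / (1.0 + math.exp(-gamma)))
    masked = [[m * x for x in e] for m, e in zip(masks, entities)]
    return masks, masked


def graph_to_doc(M, entities):
    """Compute M E^T: each token receives the embeddings of the entities covering it."""
    dim = len(entities[0])
    return [
        [sum(row[j] * entities[j][k] for j in range(len(entities))) for k in range(dim)]
        for row in M
    ]


# Three tokens with d_2 = 2, two entities: tokens 0-1 form entity 0, token 2 is entity 1.
context = [[1.0, 0.0], [3.0, 2.0], [0.0, 4.0]]
M = [[1, 0], [1, 0], [0, 1]]
E = doc_to_graph(context, M)
masks, masked = soft_mask([0.0, 0.0, 0.0, 0.0], E)
back = graph_to_doc(M, E)
```

In the full model the rows of `back` would be concatenated with $C^{t-1}$ and passed through the LSTM. A token outside every entity span receives an all-zero row.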

Prediction Layer

- four prediction targets

  - supporting sentences

  - start position of answer span

  - end position of answer span

  - answer type

- four stacked LSTMs $F_i$ produce the final representations, each conditioned on earlier outputs

  - $O_{sup} = F_0 (C^t)$

  - $O_{start} = F_1([C^t, O_{sup}])$

  - $O_{end} = F_2([C^t, O_{sup}, O_{start}])$

  - $O_{type} = F_3([C^t, O_{sup}, O_{end}])$

- training loss: $L = L_{start} + L_{end} + \lambda_s L_{sup} + \lambda_t L_{type}$
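The joint loss can be written out as a sum of cross-entropy terms. This is a minimal sketch: each term is assumed to be a softmax cross-entropy, the supporting-sentence term is averaged over sentences with `[negative, positive]` logits, and the default weights `lambda_s` and `lambda_t` are placeholders rather than the paper's values.

```python
import math


def cross_entropy(logits, target):
    """Softmax cross-entropy for one example (numerically stable log-sum-exp)."""
    top = max(logits)
    log_z = top + math.log(sum(math.exp(x - top) for x in logits))
    return log_z - logits[target]


def joint_loss(start_logits, end_logits, sup_logits, type_logits, gold,
               lambda_s=1.0, lambda_t=1.0):
    """L = L_start + L_end + lambda_s * L_sup + lambda_t * L_type."""
    l_start = cross_entropy(start_logits, gold["start"])
    l_end = cross_entropy(end_logits, gold["end"])
    # One binary decision per sentence: logits are [not supporting, supporting].
    l_sup = sum(
        cross_entropy(logits, label) for logits, label in zip(sup_logits, gold["sup"])
    ) / len(sup_logits)
    l_type = cross_entropy(type_logits, gold["type"])
    return l_start + l_end + lambda_s * l_sup + lambda_t * l_type


# With all-zero logits every term reduces to log(number of classes).
gold = {"start": 1, "end": 2, "sup": [1, 0], "type": 0}
loss = joint_loss(
    start_logits=[0.0] * 4,
    end_logits=[0.0] * 4,
    sup_logits=[[0.0, 0.0], [0.0, 0.0]],
    type_logits=[0.0] * 3,
    gold=gold,
    lambda_s=2.0,
    lambda_t=0.5,
)
```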

3.Experiments

Query Focused Extractor

Answering while Summarizing: Multi-task Learning for Multi-hop QA with Evidence Extraction

ACL, 2019.

Kosuke Nishida.

NTT Media Intelligence Laboratories.

1.Motivation

2.Model Details

3.Experiments

DecompRC

Multi-hop Reading Comprehension through Question Decomposition and Rescoring

ACL, 2019.

Sewon Min.

University of Washington. AI2.

1.Motivation

- decomposes a compositional question into simpler sub-questions that can be answered by off-the-shelf single-hop RC models

- inspired by the idea of compositionality from semantic parsing

2.Model Details

Three-step process:

- decomposes the original multi-hop question into several single-hop sub-questions, in parallel for each of a few reasoning types, based on span prediction

- for every reasoning type, DecompRC uses a single-hop reading comprehension model to answer each sub-question and combines the answers according to the reasoning type

- uses a decomposition scorer to judge which decomposition is the most suitable, and outputs the answer from that decomposition as the final answer
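The three steps can be sketched as a small pipeline. Everything here is a hypothetical stand-in: the decomposers, combiners, single-hop reader, and scorer are passed in as plain callables, and the `[ANSWER]` placeholder for feeding one sub-answer into the next sub-question is an assumption of this sketch.

```python
def decomp_rc(question, decomposers, combiners, answer_single_hop, score):
    """Try every reasoning type, then keep the best-scoring decomposition.

    decomposers: dict reasoning_type -> (question -> list of sub-questions)
    combiners:   dict reasoning_type -> (list of sub-answers -> answer)
    """
    candidates = []
    for rtype, decompose in decomposers.items():
        sub_questions = decompose(question)                  # step 1: decompose
        sub_answers = []
        for sub_q in sub_questions:                          # step 2: single-hop answers
            if sub_answers:
                sub_q = sub_q.replace("[ANSWER]", sub_answers[-1])
            sub_answers.append(answer_single_hop(sub_q))
        answer = combiners[rtype](sub_answers)
        candidates.append((rtype, sub_questions, answer))
    # step 3: the scorer picks the most suitable decomposition
    best = max(candidates, key=lambda c: score(question, c[0], c[1], c[2]))
    return best[0], best[2]


# Toy components: a lookup table as the single-hop reader, a fixed scorer.
READER = {"q1": "a1", "q2 about a1": "a2", "Q": "direct guess"}
result = decomp_rc(
    "Q",
    decomposers={
        "bridging": lambda q: ["q1", "q2 about [ANSWER]"],
        "original": lambda q: [q],
    },
    combiners={
        "bridging": lambda answers: answers[-1],
        "original": lambda answers: answers[0],
    },
    answer_single_hop=READER.get,
    score=lambda q, rtype, subs, ans: 1.0 if rtype == "bridging" else 0.0,
)
```

Keeping the undecomposed question as one of the candidates lets the scorer fall back to a direct single-hop answer when no decomposition fits.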